Visually Enhanced Mental Simulation (VEMS) is a simulation technique that combines mental simulation and think-aloud with flat plastic representations of a patient(s) and relevant assessment and treatment adjuncts. It offers simple, effective and efficient education for healthcare professionals, but there is a paucity of guidance on effective VEMS design and delivery. We aimed to explore facilitator and participant perceptions of VEMS at our institution to inform guidance for facilitators and simulation program leaders.

Using a constructivist approach, we conducted an exploratory qualitative study of the experience of VEMS participants and facilitators. The VEMS simulations at our institution ranged across community nursing, medical and surgical wards, geriatrics, emergency department, maternity and intensive care. Interviews were used to collect data on the design, delivery, experience and impact of VEMS. We analysed the data thematically, from the stance of researchers and practitioners embedded in the institution and seeking to improve our simulation delivery.

Thirteen interviews were conducted. Study participants’ experience with VEMS ranged from one or two sessions to more than 50 sessions. The context of VEMS experience was mostly interprofessional team-based simulation in diverse hospital or community settings. We identified five themes through our data analysis: 1) Flexibility and opportunity, 2) Unexpectedly engaging, 3) Sharper focus on teamwork, 4) Impact on simulation practice and programs and 5) Manikins are confusing.

VEMS is a feasible and flexible simulation modality in a health service where time and cost are at a premium. It was perceived as easier to deliver for facilitators with less technical simulation experience, and widely applicable to the diverse range of clinical situations faced by our healthcare teams. Participant engagement appeared to be easier to achieve than with manikin-based simulation and this has encouraged us to critically reconsider our modality choices for simulation within our health service.

What this study adds

Visually Enhanced Mental Simulation (VEMS) can be a simple, flexible and effective technique for the education and training of healthcare teams.

Learner engagement with VEMS can be surprisingly easy.

Engagement and learning impacts can be enhanced through careful scenario design, teamwork focus, active facilitation of scenario delivery and alignment of artefacts with learning context.

The effectiveness and simplicity of VEMS underline the need to critically evaluate and justify simulation modality choices.

Visually Enhanced Mental Simulation (VEMS) is a simulation technique that promises simple, effective and efficient education for healthcare professionals, but there is a paucity of guidance on effective VEMS design and delivery. This lack of guidance may deter facilitators for whom the modality would be well matched to their objectives, and hampers the potential impact of the technique. We explored facilitator and participant perceptions of VEMS at the Gold Coast Hospital and Health Service (GCHHS) to inform guidance for facilitators and simulation program leaders using this modality.

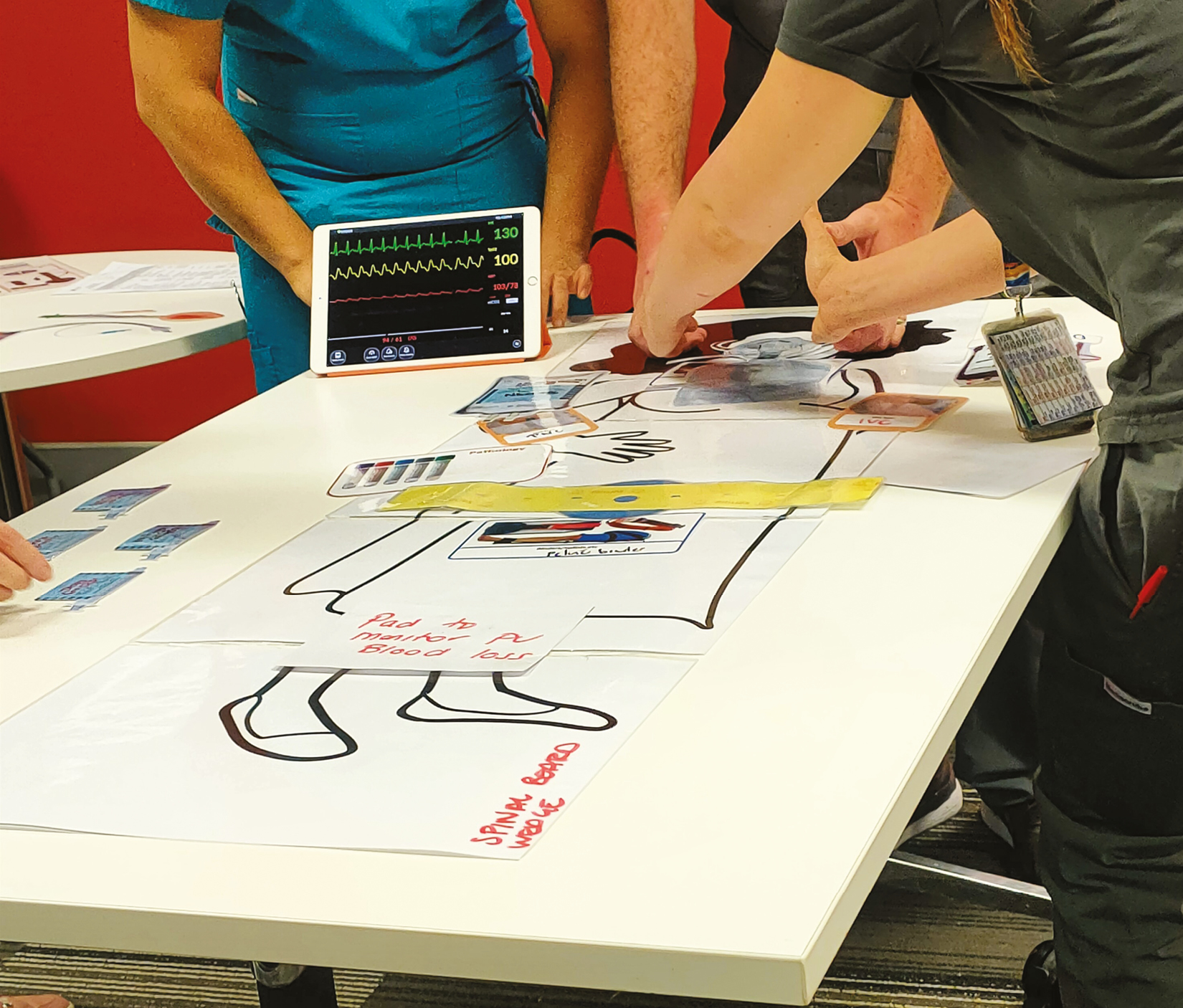

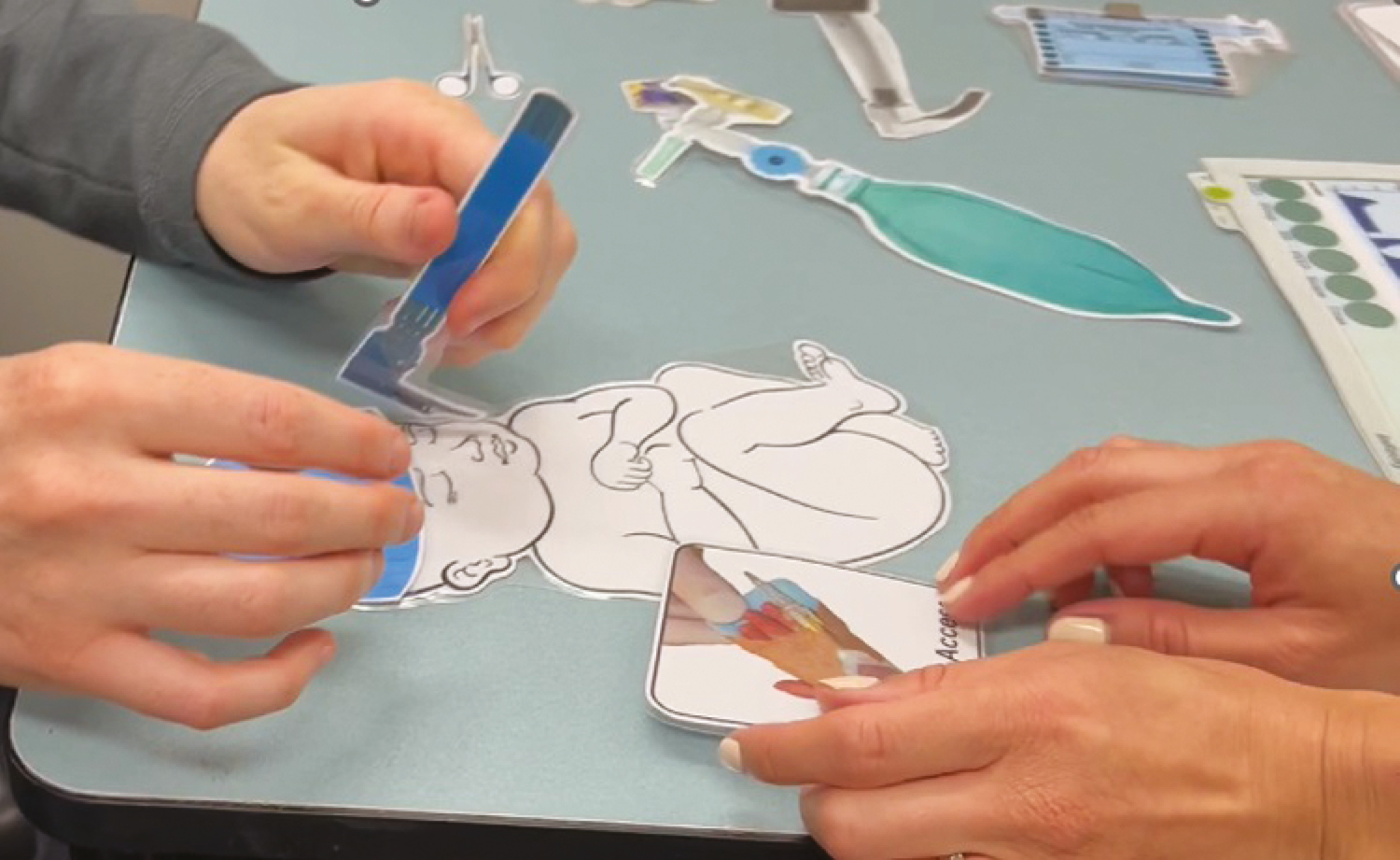

VEMS is ‘a combination of mental simulation and think-aloud with external representations of a patient and the treatments applied by the participants’ [1]. Practically, this technique has similar features to most simulation-based education (SBE) – healthcare teams practise their work together in carefully designed scenarios to care for ‘patients’ and reflect on their performance in a subsequent debriefing. However, in a VEMS format, the patient and the equipment for assessment and management of the patient are flat plastic representations (Figures 1 and 2), like a tabletop exercise (TTX), rather than manikins or simulated patients (SP) [2,3]. This makes VEMS more feasible to deliver in a short time frame and with limited resources. It may be less confronting for some participants and there is some (limited) evidence of effectiveness [4]. The educational basis for this design is the well-documented benefits of mental rehearsal [5] and of ‘think-aloud’ approaches [6] to explore cognitive processes and practise decision-making, communication and teamwork.

Figure 1. VEMS for emergency department trauma care

Figure 2. VEMS for neonatal intubation

The relative simplicity of VEMS delivery has led to increasing use of the technique at our institution and in the wider simulation community. However, simulation facilitators lack guidance for the optimal design and delivery of VEMS. Most published guidance is drawn from expert opinion and experience [2,3,7] or inferred from the wider SBE literature [8], and from tabletop exercises (TTX) in particular [9,10]. Key questions remain unanswered, including the optimal role of VEMS in a wider simulation-based educational strategy and the optimal design and delivery techniques. VEMS participant and facilitator experience has not been explored.

Evaluating the effectiveness of SBE techniques used is critical in a health service that directs resources towards any educational approach, including VEMS. Training within the health service context must be time- and cost-efficient, balancing value added with time away from clinical duties. Our starting point is that the features that lead to effective learning from SBE have been broadly described [8]. These include thoughtful design and delivery [11,12], clear learning objectives [13], alignment of simulation modality to those objectives, and expert-led pre-briefing [14] and debriefing conversations [15,16] to support reflective practice. Without these elements, there may be unintended harms from SBE, including psychological harms [17], physical safety risks [18], and perpetuation of harmful biases and stereotypes [19]. We draw upon these influences on SBE effectiveness to frame our exploration in this study, and to structure our more granular elaboration of those elements for VEMS practice.

Our research aim was to explore the experience of participants and facilitators engaged in VEMS in our acute care health service context. Specifically, we sought to understand a) factors in VEMS design and delivery that promote participant engagement, b) factors that support optimal educational outcomes, as perceived by facilitators and participants, and c) factors that influence the transfer of lessons learned in VEMS to clinical practice.

Our description of the study team, methods and results is guided by published Standards for Reporting Qualitative Research [20]. Using a constructivist approach, we conducted an exploratory qualitative study of the experience of VEMS participants and facilitators at the GCHHS. Through this constructivist orientation, we do not seek an objective, singular truth; rather, our evolving insights are constructed by the researchers and study participants. We were influenced by Kerin’s [21] categories of “Design”, “Experience” and “Impact” in structuring our approach to simulation program evaluation.

Cognizant of the tension in educational evaluation between ‘proving’ versus ‘improving’ [21,22], we are oriented squarely towards the latter. The effectiveness of SBE is affected by a wide range of participant, facilitator and contextual factors, making direct experimental comparisons of simulation techniques relatively unhelpful. More useful is to explore factors influencing what ‘works’ for whom, in what circumstances, in what respects and how? [21]. Through this lens, facilitator and participant experience is a helpful source of practical, granular guidance for optimizing simulation design and delivery [23].

Context is critically important in any educational intervention, and hence we describe our clinical environment and simulation context in some detail. The GCHHS operates two major hospitals, a day procedure facility and community health services. Services include all major adult specialties as well as paediatrics, and tertiary care capabilities in trauma, cardiothoracic medicine and critical care. GCHHS employs nearly 10,000 staff.

The Simulation Service is highly integrated within the health service operations, and prioritizes team and organizational learning within a translational simulation approach [24]. There is significant pressure to minimize the impact of simulation activities on service provision, including limited time for staff to attend simulations. The service is staffed by four dedicated simulation educators with nursing professional backgrounds, supported by an assistant director of nursing and a medical director. Simulation activities include educational workshops and courses (approximately 30% of activity) and in situ simulations focused on team and system improvement (approximately 70%). There is an active faculty development program for staff interested in leading simulation activities across the health service, with more than 30 workshops a year on design and delivery of simulation, technical aspects of delivery and debriefing.

VEMS was introduced as a modality into the simulation program at GCHHS in 2022 following description of the technique by Dogan [1]. The uptake by educators has been rapid, with VEMS now used in simulations ranging across community nursing, medical and surgical wards, geriatrics, emergency department, maternity and intensive care. In response to this interest, we have been conducting VEMS faculty development workshops since 2023. There are now more than 100 VEMS sessions per year, mostly additional to (rather than substituted for) our manikin and SP-based simulation activities.

VB is Medical Director of the Gold Coast Health Simulation Service, with more than 20 years’ experience in healthcare simulation and scholarship, including a PhD in translational simulation. She is an emergency physician. VB regularly conducts VEMS sessions within GCHHS and leads faculty development sessions on this topic at GCHHS and in other institutions. EP is an emergency physician and anthropologist, with extensive experience in healthcare simulation and qualitative research. She facilitates VEMS sessions, including faculty development, at GCHHS and in other clinical settings. CSp is an emergency physician at GCHHS and lead for simulation in the emergency department at the institution. He regularly facilitates VEMS sessions. JS is a simulation educator at GCHHS and a registered nurse with an anaesthetic background. CSc is a simulation educator at GCHHS and a registered nurse with an emergency medicine background. CSc and JS conduct VEMS sessions at the institution and support other educators using the modality with their teams. Our data analysis and discussion are influenced by our positioning as facilitators of VEMS sessions, and by our combined experience of many years of simulation practice. We consider this a strength of this study and report our reflexivity throughout the results and discussion.

Recruitment of study participants was pragmatic. All facilitators and learners who had engaged in the design, delivery and debriefing of VEMS sessions at GCHHS since 2021 were eligible to participate. Potential study participants were identified through the GCHHS Simulation Service attendance records and through medical and nursing educational leads who are members of the Simulation Service SharePoint group. Invitations to participate in the study were distributed via email, with information about the study process.

After study participants registered interest and provided consent, interviews were scheduled at a time convenient for them. Interviews were conducted either in person at a Gold Coast Health site or via Microsoft Teams, and were audio recorded.

Interviews were conducted by VB and EP using a semi-structured interview guide (Appendix 1), with questions oriented towards design, delivery and impact of the VEMS sessions. Additional concepts were explored if raised by participants and relevant to the overall study aims.

Audio recordings were transcribed using otter.ai (https://otter.ai) and then reviewed and cleaned for transcription errors. Participants were emailed a copy of their transcript and could withdraw up to 1 week after this.

We analysed the data to identify themes, using reflexive thematic analysis based on Braun and Clarke’s six-step approach [25]. All members of the research team familiarized themselves with the data set, and each member of the team was then allocated three specific interviews to undertake line by line open coding independently before meeting to compare findings. All interviews were assigned to at least one but up to two reviewers. From this initial meeting, key organizing concepts were identified, and possible themes and subthemes were generated. The team reflected on the data and on their positioning as simulation facilitators and as colleagues of some of the study participants, as well as their own experience facilitating VEMS sessions. We aimed to achieve richer interpretations of meaning through our analysis by multiple researchers, rather than attempting to achieve consensus. We discussed whether sufficient data had been gathered at this point, and decided that no further interviews were needed, given the consistency of concepts we had identified in the data. Each team member was then allocated three different interviews to analyse, sensitized by the draft themes, but open to additional concepts that might be identified. The team met again and refined the themes and subthemes. VB then re-coded the whole data set using NVivo14 software (QSR International), oriented by those themes and subthemes, and identified illustrative quotations from study participants.

Thirteen interviews were conducted. Three interviewees had been learners in VEMS sessions, seven had been facilitators, and three participants had experienced both facilitator and learner roles. Study participants’ experience with VEMS ranged from one or two sessions to more than 50 sessions. The context of VEMS experience was mostly interprofessional team-based simulation in hospital or community settings, including teams in the emergency department, neurology, maternity, medical and surgical wards, intensive care, paediatrics and community nursing. The duration of interviews ranged from 19 to 37 minutes, with an average of 27 minutes. No participants withdrew from the study after being enrolled.

We identified five themes through our data analysis: 1) Flexibility and opportunity, 2) Unexpectedly engaging, 3) Sharper focus on teamwork, 4) Impact on simulation practice and programs and 5) Manikins are confusing. Each of these themes is presented here, with subthemes where relevant, and with illustrative quotations. Table 1 offers a succinct illustration of the themes and subthemes. We have integrated interpretations and explanations of our findings alongside the presentation of the data itself, weaving in analysis and commentary about the themes and patterns that we identified, rather than strictly presenting raw facts without context in the results section alone.

| Themes | Subthemes |
|---|---|
| 1. Flexibility and opportunity | |
| 2. Unexpectedly engaging | • Easier engagement for learners • Facilitator strategies |
| 3. Sharper focus on teamwork | |
| 4. Impact on simulation practice and programs | • Feasibility advantages • Scaffolding learning • Risks and missteps • Faculty support |
| 5. Manikins are confusing | |

1) Flexibility and opportunity

The VEMS modality influenced facilitators’ approach to simulation design. Without the constraints of manikin functionality or safety concerns with (human) simulated patients, educators were free to embrace topics and issues beyond their usual simulation practice. Flat plastic patients with zero physical realism lent themselves to a wider range of interpretations and hence clinical scenarios.

Facilitators described being freer to embrace flexible formats including ‘pause and discuss’, and to hybridize their VEMS with part task trainers. They found it easier to pair flat plastic VEMS patients with part task trainers or specific clinical equipment if focused skills or tasks were required for educational aims, e.g. pumps, renal dialysis machines. This afforded a wider scope of practice to be simulated, without concerns about whether a manikin or SP could ‘do’ it. Sensing what a group needed was then easier to transform into a simulation experience.

the scenarios weren’t specifically about how to spike a bag of blood because we know how to do that. [P13]

However, some cautioned that this flexibility was a double-edged sword, and that it was important to maintain discipline about the simulation objectives. One gave the example of bag valve mask (BVM) ventilation, and VEMS being well suited for the decision-making to employ a BVM but not the technical motor skill to do the task.

you need to know when to use a bag valve mask, you also need to know how to use it, If you need to know how to use it, well, then we should probably use one for real and other practice to get the feel of it…. [P4]

There was a dominance of teamwork objectives in VEMS session design, as described by both facilitators and learners. This may also be influenced by our GCHHS context, in which simulation is mostly employed for practising interprofessional teams. VEMS was described as well suited to practising team coordination, task prioritization, communication strategies and leadership skill development. This was perceived as a sharper focus when learners didn’t need to focus on technical skills. This in turn led to facilitators working hard to have realistic team composition in their VEMS sessions.

You really see people like in this instance developing that really clear shared mental model of what teams look like, what good communication looks like, and what good leadership and followership looks like. [P11]

2) Unexpectedly engaging

Facilitators and learners found the engagement barriers lower, which was a surprise to both groups. Facilitators adopted various specific techniques for supporting immersion in a patient case with almost no physical realism.

And then seeing that the impact that it can have and the level of engagement… going ohhhhh, wow, that was a surprise. [P11]

i) Easier engagement for learners

Without the ‘distraction’ of a manikin, learners could more readily fill in reality gaps and immerse themselves. Learners appeared to have less fear and less confusion about the patient condition, compared to ‘second guessing’ manikin cues.

it’s easier to make that disconnect between what’s real and what’s fake. And I think sometimes with the high fidelity simulations that lines are little more blurred. [P8]

Learners described their strategies for conjuring mental representations of the encounter based on prior experience.

it’s just pretending like you are in that resus 2 then what would you do if this situation came in? [P2]

Some respondents found this high level of engagement hard to understand. Facilitators and participants could see (and feel) the strong engagement but were searching to explain why that was.

I think it I found it a bit more relaxing, compared to an actual simulation for some very odd reason [P5]

when I first saw them … I was like, people were not going to engage with this process, but something that really like rang true to me was that uncanny valley. [P3]

ii) Facilitator strategies for engagement

Facilitators described using familiar strategies for learner engagement drawn from their broader simulation practice, and some they had specifically employed for VEMS. They described how active involvement in the simulation delivery process, careful preparation of the learners for the VEMS experience and thoughtful use of visual adjuncts influenced engagement.

Facilitators were clear: VEMS doesn’t run itself. Facilitators had to actively watch for participants seeking cues to patient condition, etc. and be ready to provide them quickly and unambiguously during scenario delivery. This judgement required facilitators to have knowledge of the learner context and of the critical information for decision-making in the simulated encounter. These cues extended to demonstrating a response to decisions made or actions taken, e.g. giving oxygen leads to improvement in oxygen saturations. This generally involved the facilitator standing close to the patient ‘bedside’ with the learner team.

So paying close attention to what they were doing to try and anticipate actions to be trying to be quick with giving the VEMS feedback to be able to progress the scenario. [P3]

Careful learner preparation for VEMS involved many of the well-described practices for pre-briefing: clear objectives, time spent gaining rapport with the learner group and strategies to attune to and support psychological safety [26,27]. More specific to VEMS, practical visual familiarization with the learning space and process was powerful. Clarity about the level of physical realism and how cues about patient condition would be provided was perceived to be important. This familiarization activity also set a tone of engagement by having the facilitator proximate and involved. There were suggestions that this visual orientation and explanation was so powerful that a video illustration of the process would be a useful addition to learner pre-reading.

I think getting them into the space to see it because it’s visual, rather than just throwing them into it. They need to see where all the drugs are laid out, the laminated cards, … Yeah, because it’s visual. Yeah. And they’re picking it up really quickly, actually. [P13]

And I think coming from the facilitator side that professionalism and buy in from you helps them to then buy in as well. [P8]

Most facilitators had a ‘VEMS kit’ of flat plastic patient representations and a variety of laminated visual representations of clinical treatment adjuncts (e.g. IV cannulas, airway equipment, blood collection tubes, syringes). Other engagement artefacts included monitor emulators and marker pens for participants. Facilitators emphasized that the choice of these adjuncts was based on functional task alignment with the scenario objectives and with the learner group context, and on their aim to keep it simple and feasible.

And so, like, we had a community bag, everything they would have used was made into a VEMS equipment. [P9] (Community nursing group)

I think you’ve gotta be careful with them not to go crazy and have like, every single piece of equipment that you possibly may need. So I just try and keep it really simple and keep that printing budget low. [P3]

3) Sharper focus on teamwork

Participants described impacts on their confidence, clinical knowledge and teamworking skills, with a preponderance of the last of these. Without the element of procedural skill performance, learners and facilitators focused on team-based decision-making.

the thing that I have really enjoyed with these VEMS … when there’s a kind of a grey decision-making area who’s going to do what when you’re going to do it, what’s your tipping point? And to have those round the room conversations and that kind of engages more people in deciding, [P12]

Aligned with broader experience with interprofessional team training, these sessions also appeared to have a team bonding impact, increase team familiarity and even shift towards a learning culture for the teams.

we get to work with the nurses who are on the floor. So I got to know more about the nurses. They get to know more about me and I find that we tend to have a little more of a closer relationship now afterwards. [P10]

VEMS appeared to engender habits of ‘thinking aloud’ for team members, especially team leaders, prompted by the need to describe physical states that couldn’t be seen on flat plastic patient representations, and by the lack of ‘distractors’ such as actually having to do procedures. Facilitators appreciated this element for their subsequent debriefings, as underlying frames for action were more often revealed during the simulation.

So yeah, so it was a good experience in that way because it forced me to think just think out loud but in a structured way. [P10]

4) Impact on simulation practice and programs

i) Feasibility advantages

The rapid uptake of VEMS at our institution appears to be largely due to feasibility advantages – simple and quick at the point of delivery, less stressful for facilitators and relatively free of technical challenges. The less stressful nature of VEMS made staff attendance easier to achieve, and opened simulation delivery to a wider group of facilitators who have sound educational skills but who were less ‘tech-savvy’.

Logistically, it is much easier to get people to see this as something that is achievable, particularly in time pressured settings or settings where we don’t have a lot of technical affinity. [P11]

ii) Scaffolding learning

Facilitators sometimes used VEMS to scaffold ‘up’ to immersive or manikin-based simulations, either within a single day course or as part of a comprehensive, multifaceted simulation program. They also described VEMS as a layer up from skills stations and part task training practice, where VEMS was the integrating, ‘putting it together’ element of an education program. This was sometimes applied for more junior learners, or those transitioning to a higher clinical role, for whom the breakdown of task elements was better aligned to their learning goals.

progressed to some simulations that use simulated in a more traditional kind of immersive simulation modality [P11]

iii) Risks and missteps

Facilitators engaged in trial and error with this novel modality and identified risks and missteps in their VEMS practice. The feasibility advantages at the point of delivery could be falsely extrapolated to easier design and preparation, which was not the case.

there’s a general idea that VEMS is simple, and it’s kind of simple at the point of delivery, but … you have lots of effort put in at the point of planning and organising. [P1]

A focus on decision-making versus task execution could risk a ‘doctor focus’ in sessions. Time compression for procedural elements could send unintended messages about these tasks being quick and easy.

that time warping can really occur where suddenly you can manage an entire MHP [Massive Haemorrhage Protocol] and an entire resus medication role with one or two nurses, and you’re going well, that’s not reflective of reality. [P11]

Hybridization (with some laminated and some real elements) could be taken too far and remove many of the feasibility advantages if not thoughtfully applied. Representation of patients as black and white silhouettes without a voice risks objectification of healthcare consumers and their role in their care. Conversely, one team planned to use an enlarged photograph of one of their own children as the VEMS model before realizing the potential harms. These missteps highlighted the need for thoughtful design and delivery and for sharing lessons with the community of practice.

iv) Faculty support

Most facilitators described support from the Simulation Service, peer mentoring and the institutional VEMS faculty development workshops as their sources of guidance about how to design and deliver VEMS effectively. They also identified support for staff attendance from nursing and medical unit leaders as crucial for success. Facilitators offered suggestions for other adjuncts, such as instructional videos that would help new facilitators visualize a VEMS session before they embarked upon using the modality.

5) Manikins are confusing

Although not a focus of our exploration, both facilitators and learners made many comparisons between VEMS and other modalities of learning and of simulation-based training. Delivering and/or participating in VEMS was compared to manikins, mental rehearsal, embedded simulated patients, case-based discussions and even didactic PowerPoint sessions as educators sought to tease out the appropriate place of VEMS within their programs.

We were startled by the comparison with manikins, and the strong message that it was much easier to engage with VEMS. Manikins were described as confusing and unreliable. We heard descriptions of how learners often spent time and energy trying to check if cues from manikins were those intended by facilitators, whereas those cues provided in VEMS were immediate and trustworthy. The fear of trickery [28] (an omnipresent fear despite our best efforts as a simulation program to never trick participants) was reduced.

Think I quite like the flat plastic a lot more than the manikin and think because we’re so used to the manikin sometimes not doing what we expect it to do or not completely trusting the mechanics of the manikin… we still feel the need to sometimes double confirm e.g. that to say air entries both equal away … whereas with the plastic is just more direct with communication and it removes that doubt of am I hearing or what am I examining is wrong. [P5]

Our exploration of participant and facilitator experience with VEMS has explained its increasing popularity within our institution and provided some granular insights into effective design and delivery practices. VEMS is a highly feasible simulation modality in a health service where time and cost are at a premium. It is perceived as easy to deliver for facilitators with less technical simulation experience, and more widely applicable to the diverse range of clinical situations faced by our healthcare teams. Participant engagement appears to be easy to achieve and can be enhanced by good participant preparation and active facilitation of sessions. There are potential risks and unintended consequences, as with all SBE, and these can be mitigated through careful design and delivery. Drawing on these insights, and synthesized with guidance from the literature on SBE best practice, we provide recommendations for simulation programs and practitioners using VEMS in Figure 3.

Figure 3. Recommendations for the design and delivery of VEMS

We have focused our further discussion in this section on 1) our stance on the generalizability of this work, and 2) the counterintuitive finding of better participant immersion with VEMS than manikin-based simulation. Our insights are drawn from a single institution, but from a wide range of clinical contexts. The ‘VEMS-specific’ design and delivery insights align broadly with published SBE best practice and are likely transferable beyond our institution. Our research team is highly engaged in simulation, including VEMS, at GCHHS. We have worked with many of our study participants (as learners or co-facilitators) in VEMS sessions. This influences our interpretation of the data through an ‘insider’ stance, and explains our strong motivation to seek improvement, rather than proof of VEMS advantages. Identifying risks, missteps and unintended consequences in simulation practice is under-represented in SBE literature [21], and we are pleased that some of our findings are pitfalls to avoid.

Our interest is piqued by the conversations related to engagement and the seemingly paradoxical finding of better immersion with VEMS – with zero physical realism – compared to high technology manikins. Participants prefer and are less likely to be confused by a facilitator telling them the size and reactivity of pupils on a flat plastic patient than by checking them with a pen light on a high technology manikin only to turn to the ceiling and ask, ‘am I supposed to be seeing reactive pupils?’. This demands more intense exploration. It has made us reconsider when and how we use manikins in simulation and the strategies we need to overcome this intense and cognitively draining confusion.

Participant immersion – the extent to which learners feel engaged and absorbed in simulation – has been well studied [29-31], but appears to be poorly applied to what we observe in practice. The literature offers us thoughtful explorations of ‘double intentionality’ – participants shifting focus between the simulation’s artificiality and professional learning goals [31], useful terminology such as ‘mimetic experience’ (acting ‘as if’ it were real) [30,31] and distinctions between reality cues (elements that enhance realism and immersion) versus fiction cues (elements that highlight the simulation’s artificiality) [30]. A carefully designed Immersion Score Rating Instrument (ISRI) offers a structure for exploring the nuance of participant engagement [29]. And yet we observe in practice (and are guilty ourselves of) a default to manikin-based simulation and blithe pronouncements to lean into the unreality. We suggest simulation programs should critically question their simulation design and modality choices based on deeper evaluation of their participants’ immersion.

VEMS is a flexible, feasible simulation technique with good engagement and positive impacts on practice if thoughtfully designed and delivered. We found VEMS well suited to practising teamwork and task coordination, and to have a place within scaffolded learning programs at the department or health service level. We encourage simulation practitioners to critically consider their choice of simulation modalities and embrace and address unintended consequences of those choices.

None declared.

VB and CSp conceived the study idea. VB and EP designed the study, and VB drafted and managed the ethics submission with input from all authors. VB and EP collected data. All authors were involved in data analysis. VB drafted the manuscript, with review by all authors. The final manuscript was approved by all authors.

No funding was received for the study.

None declared.

The study was approved by the Gold Coast Hospital and Health Service Human Research Ethics Committee (HREC/2024/QGC/105261). All study participants gave informed consent.

All authors are employed by Gold Coast Hospital and Health Service. They have no other disclosures.

What is your role in the health service?

How much experience have you had with VEMS specifically and SBE more generally?

Tell us about a recent VEMS experience you were involved in.

Prompts

–What was the purpose/context?

–Who was involved?

–What was your role?

Engagement

What worked to support the engagement of participants?

Prompts

–Design – scenario content/objectives

–Delivery – facilitator approach/pre-briefing, etc.

–Prior expectations/experience of attendees

What barriers were there to engagement?

Design

Tell us about your VEMS design and delivery, and why you do it that way.

E.g. patient(s) – how were they portrayed and why?

(e.g. black and white silhouette, photograph/image, actor)

E.g. What adjuncts were used?

(e.g. monitoring, drugs, interventions, assessment adjuncts, ECGs, etc.)

Effectiveness

How effective was the VEMS session as a learning activity? (for individuals/team/organization)

–Why or why not?

–How did you know?

On reflection, how well did the VEMS technique match the objectives of the session?

In your general experience of VEMS facilitation

What do you think works for pre-briefing for VEMS sessions?

What do you think works for debriefing for VEMS sessions?

What advice would you give to facilitators who are planning to design and deliver VEMS sessions?

What support/faculty development do you feel is most helpful to support your role in facilitating VEMS?

What is your role in the health service?

How much experience have you had with VEMS specifically and SBE more generally?

Tell us about a recent VEMS experience you were involved in.

Prompts

–What was the purpose/context?

–Who was involved?

–What was your role?

How effective was the VEMS session as a learning activity for you/your team?

–Why or why not?

–What does ‘effective’ mean to you?

What worked to support your engagement in the activity?

Prompts

–Design – scenario content/objectives?

–Delivery – facilitator approach/pre-briefing, etc.?

–Prior expectations/experience?

What barriers were there to engagement?

Did you receive pre-reading and was it helpful?

How was the pre-briefing conducted and what effect did that have on your participation?

How was the debriefing conducted and what effect did that have on your participation?

Can you describe an example where you have applied something you learnt in VEMS in real clinical practice?

What advice would you give to facilitators who are planning to design and deliver VEMS sessions?

What advice would you give to participants prior to them attending a VEMS session, so they can get the most out of it?